Siri just turned six years old. Alexa just turned three. If we can ask our phones for the weather in Albuquerque and compel a plastic cylinder in our living rooms to read the Washington Post out loud, why are we still transcribing interviews by hand?

Well, it turns out we don’t really have to. Automatic transcription tools have been on the market for a while now, and they’re finally getting good. It now takes just a few minutes, and a few dollars, to upload audio or video to a site and receive a fairly comprehensive transcript.

But, like all tools, some are better than others. We tested (or tried to test — more on that later) eight of the most popular transcription tools aimed at journalists, including Dragon Dictation, Happy Scribe, oTranscribe, Recordly, Rev, Sonix, Trint and YouTube. We ran each tool through a variety of real-world scenarios, experimenting with how each one fared against a journalist’s typical use.

Though none of the tools were perfect, one edged out the others as the best in the category.

A combination of accuracy, features and ease of use makes Trint the best choice for automatic transcription for journalists. Though it wasn’t the most accurate, most feature-rich or the cheapest tool we tried, its transcript editing tools and ability to fit a little more seamlessly into a journalist’s workflow help it to edge out its competitors. Read on to see why.

As you’ll see, the accuracy rates of these tools are low. That’s because we tried our darndest to confuse them.

First, to reflect a wide range of people, voices and accents, we recorded our sample audio with four participants. They included:

Kristen joined us via Google Hangouts/YouTube Live (disclosure: a grant from Google News Lab partially funds my position), which most automatic transcription tools openly warn against. Audio from a phone or video chat seems to be universally difficult for them to handle.

To torture the algorithms even more, we read passages at a much faster pace than we usually speak; Dulce and Alexios spoke a variety of foreign languages (Italian, Spanish, French and Greek); we uttered as many proper nouns as possible (Apalachicola, Michael Oreskes and various Greek islands, to name a few); we got creative with Urban Dictionary (a portmanteau of Paul Manafort and a crude word describing the state of his legal situation); and we talked over each other with some frequency.

We recorded our 14-minute test in Poynter’s webinar studio and were interrupted by the sound of at least one loud plane overhead (there’s an airport a few blocks away), an emergency vehicle and the clamoring of Kristen’s phone.

We recorded the audio three ways:

We then uploaded the audio to each tool and kept track of how long each one took to transcribe. We normalized the resulting transcripts using Microsoft Word, removing timestamps and making sure speaker names were congruent. As a control, I transcribed the audio myself (using oTranscribe) and then listened through several times to check for total accuracy. We also tried Rev, a paid service that uses human transcribers rather than algorithms, to see how it stacked up.

We tested a variety of document comparison tools to see which worked best, settling on Copyscape as the most sound option. We compared the transcripts generated by the tools and services to the 100 percent correct one that I created with oTranscribe.
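For readers who would rather script a rough version of this comparison, here is a minimal sketch in Python. It is not how Copyscape scores documents, and it will not reproduce our percentages; it simply uses the standard library's `difflib` to measure word-level overlap between a reference transcript and a candidate. The function names `normalize` and `similarity` and the timestamp pattern are our own illustrative assumptions.

```python
import re
from difflib import SequenceMatcher

# Assumed timestamp shapes: 1:23, 01:23 or 00:01:23, optionally in brackets.
TIMESTAMP = re.compile(r"\[?\b\d{1,2}:\d{2}(?::\d{2})?\b\]?")


def normalize(text: str) -> list[str]:
    """Strip timestamps and punctuation, lowercase, and split into words."""
    text = TIMESTAMP.sub(" ", text)
    text = re.sub(r"[^\w\s']", " ", text.lower())
    return text.split()


def similarity(reference: str, candidate: str) -> float:
    """Return a word-level similarity ratio between 0.0 and 1.0."""
    matcher = SequenceMatcher(
        None, normalize(reference), normalize(candidate), autojunk=False
    )
    return matcher.ratio()


if __name__ == "__main__":
    reference = "let me open Urban Dictionary and we can go through some of those"
    candidate = "I mean even in the urban dictionary we can go through those"
    print(f"{similarity(reference, candidate):.0%} similar")
```

Comparing words rather than characters keeps a single misspelling from dragging down the score for a whole sentence, though a different metric would give different numbers.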

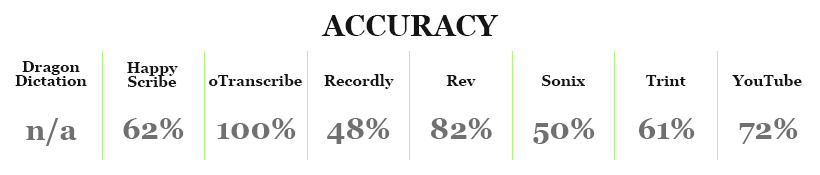

It seems that the people concerned about the artificial intelligence uprising have at least a few more years to prep, as the one human transcription service we tested beat automatic transcriptions by a wide margin.

Rev earned an 82 percent accuracy rating, with the human transcriber mostly failing to catch foreign languages (which, to be fair, is a separate service), a few proper nouns, some crosstalk, a few slang words and chunks of mumbling. Though the other tools mostly missed these things too, the human transcribers at Rev at least noted things like “[inaudible]” and “[crosstalk]” and “[foreign language],” which were useful placeholders for later corrections.

Even with the missing bits, the Rev transcript is entirely readable and coherent. If you weren’t around for the initial conversation, you could get the crux of what we were talking about just by reading it.

The next most accurate transcription was YouTube. The video hosting site automatically created captions for our YouTube live video that were 72 percent accurate. But even with a drop of just 10 percentage points in accuracy, the transcript is significantly less readable than Rev’s because YouTube provides no punctuation or speaker segmentation. The captions exist as a massive block of text. Without the audio alongside them, it would be nearly impossible for someone who wasn’t part of the conversation to follow it.

There are other downsides to YouTube’s offerings, but we’ll talk about those when we get to features.

Happy Scribe proved to be the most accurate dedicated non-human transcription tool, with 62 percent accuracy in our experiment. The tool warns on its upload page to “avoid heavy background noise,” “avoid heavy accents,” “avoid Skype and phone interviews,” and “keep the microphone close to the speaker,” all of which we dutifully ignored.

The transcript is close to accurate in places where I was speaking, especially when there wasn’t any crosstalk and I wasn’t using proper nouns, but struggled quite a bit with transcribing Dulce, Kristen and Alexios. It broke different speakers into new paragraphs in some places but failed in others. The overall transcript varies between entirely coherent in some places and bizarrely incoherent in others, such as when it transcribed Alexios saying “let me open Urban Dictionary and we can go through some of those” as “I mean even in the urban dictionary girls are close.”

Trint offered similar results, with 61 percent accuracy. It messed up in many of the same places, fumbling with accents, audio from YouTube and sections with crosstalk or quiet speaking. It didn’t mistranscribe in exactly the same ways as Happy Scribe, though. The Urban Dictionary sentence from above appeared as “I mean even in the urban dictionary we can go through those.”

Overall, Trint’s transcript is slightly easier to read than Happy Scribe’s because it does a better job of differentiating speakers and breaking them into new paragraphs. It’s not perfect, but it adds a lot of clarity when it works.

Sonix proved to be the next most accurate at 50 percent. Sonix worked slightly better than Happy Scribe and Trint when a single speaker was talking loudly. But any amount of crosstalk, background noise or even laughter (all things that will likely appear in any real-world use of the tool) seemed to confuse it more than the others. It captured the Urban Dictionary sentence as “to Open in the urban dictionary and we can go through some of those.”

Like the other tools, Sonix tried to break speakers into different paragraphs, but it seemed to be slightly worse at it.

Recordly was the least accurate of the automatic transcription tools, with 48 percent accuracy. It captured the Urban Dictionary sentence as “let me open that urban dictionary and we can. Go through some,” which isn’t bad, but that chunk of text isn’t representative of the rest of the transcript. Like YouTube, Recordly’s transcript is one giant block of text. Unlike YouTube, it does add punctuation, though less frequently and with lower accuracy than the other tools.

The Recordly transcript is the least helpful out of context.

Overall, the best transcript came from my own hand with oTranscribe. Rev turned out the best transcript that I didn’t have to transcribe myself. But this is a review of automatic transcription tools, and in that category Happy Scribe just barely edged out Trint to come out on top.

A few things seem to be automatic transcription tool industry standards. The ability to play back uploaded audio is an obvious one. All tools allow users to export transcripts in various formats.

The browser-based tools (which means all except for Recordly) offer a common suite, as well. All allow users to click various points in the text and skip directly to that part of the recording. They all have options to play back audio at a slower speed (with shortcut keys or by fiddling with settings), manually edit transcripts, upload video in addition to audio and store transcripts for later use.

Trint goes a step beyond and features a visualized waveform of the audio at the bottom of the transcript that users can skip through at will. It also has built-in tools to find and replace, highlight or strike out text. Users can add a roster of speakers to the tool and attach their name to each paragraph. It also has a handy feature to email a transcript with one click.

Sonix features all of these tools (except the interactive waveform) and a few more. The most helpful are “confidence colors,” which assign different colors to words Sonix is less confident about; an audio quality rater, which tells you how confident Sonix is about its transcription; and automated speaker identification, a beta feature that attempts to identify various speakers and assign them IDs.

In our test, Sonix only identified two different speakers, so this tool needs some work, but it’s still tremendously helpful.

Recordly, the only app (iOS only) of the bunch, offers the fewest features. It’s pretty much a record-and-wait experience. The transcript is delivered in a format similar to Apple’s built-in notes app, with limited editing functionality. It also allows users to export the audio or text to another app.

Though Trint’s find-and-replace and waveform features are helpful when correcting transcripts, Sonix’s features add vital transparency to the transcription process. And though the speaker identification beta isn’t entirely reliable, it’s an ambitious tool that should only get better from here.

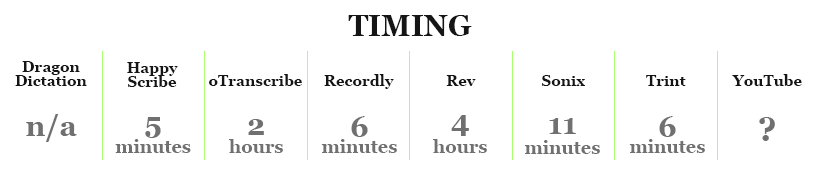

Here’s where automatic transcription shines. All of the tools provided a transcript in fewer minutes than the length of the audio file we submitted. The difference between Happy Scribe (five minutes), Trint (six minutes) and Recordly (six minutes) was negligible, but Sonix took a bit longer (11 minutes). (Update: A representative from Sonix reached out to say that its speed is in line with the other tools when the speaker identification feature is turned off.) In a real-world setting, this could be a crucial difference, especially with longer transcriptions.

YouTube is a bit of a mystery here. For this transcript, it took just a few minutes for the automated captions to appear. In past experiences, we’ve found that the length of time it takes for them to appear can vary quite a bit. Since YouTube isn’t really meant to be used in this way, we’re not sure how long it typically takes.

It took about four hours and 15 minutes for Rev’s human transcribers to finish their transcript. It took about half of that for me to do it myself with oTranscribe, but not without several breaks, Spotify’s Deep Focus playlist and two gallons of coffee.

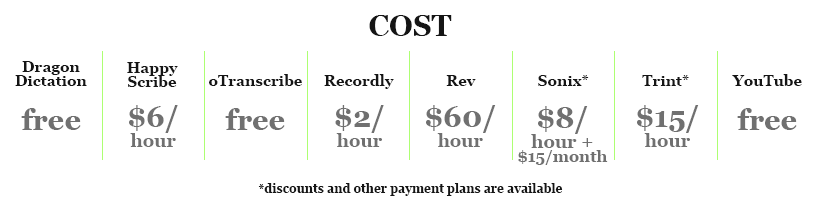

You can’t beat free (YouTube, oTranscribe), but when it comes to the dedicated automatic transcription tools, the cost varies widely. To determine the best price, you have to consider how often you’ll use the tool.

Sonix is the most expensive, with a base plan starting at $15 per month plus $8 for every hour of transcribed audio. But the tool offers a hefty 33 percent discount for paying annually instead of monthly.

Trint offers pay-as-you-upload transcription at $15 an hour, or a $40-a-month plan covering up to three hours of transcribed audio. Additional transcriptions cost just north of $13 per hour.

Happy Scribe costs a flat 10 cents per minute of uploaded audio. For less math-inclined types, that’s $6 per hour.

At a meager $2 per hour, with the first hour free, Recordly is by far the cheapest automatic transcription option.

Unsurprisingly, the human transcribers at Rev cost more than the other tools. Our 13-minute clip cost $14 to transcribe, and we paid $3.50 more for timestamps. Still, the relatively low cost for the hours of work involved makes us wonder where Rev’s transcribers are in the world and how well they’re being compensated.
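To see how those prices play out at different usage levels, here is a rough Python sketch that estimates one month's bill for a given number of audio hours. It rests on several assumptions of ours: monthly billing with no annual discount, Trint overage rounded down to exactly $13 per hour, Recordly's free hour applied once, and Rev billed at $1 per minute with no extras. The function name `monthly_cost` is purely illustrative.

```python
def monthly_cost(hours: float) -> dict[str, float]:
    """Estimate one month's cost in dollars for `hours` of transcribed audio."""
    return {
        # $15 base plan plus $8 per transcribed hour (annual discount ignored).
        "Sonix": 15 + 8 * hours,
        # Pay-as-you-upload at $15 per hour.
        "Trint (pay-as-you-upload)": 15 * hours,
        # $40 covers three hours; overage assumed to be exactly $13 per hour.
        "Trint (monthly plan)": 40 + 13 * max(0, hours - 3),
        # 10 cents per minute, or $6 per hour.
        "Happy Scribe": 6 * hours,
        # $2 per hour, with the first hour assumed free.
        "Recordly": 2 * max(0, hours - 1),
        # Human transcription at $1 per minute, timestamps not included.
        "Rev": 60 * hours,
    }


if __name__ == "__main__":
    # Print the tools from cheapest to most expensive for five hours of audio.
    for tool, cost in sorted(monthly_cost(5).items(), key=lambda item: item[1]):
        print(f"{tool}: ${cost:.2f}")
```

Changing the hours shows where the plans cross over: a flat monthly fee only pays off once you transcribe enough audio to outrun the per-hour options.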

None of these tools are difficult to use. You upload a file to each one (or record audio with it, in Recordly’s case) and, some time later, it sends you a link to an editable transcript.

Trint takes a big step beyond file uploads and accepts audio or video from a variety of sources, including Dropbox, Google Drive and FTP, and even allows users to just enter a link. This is unique among the tools we tested. Trint also asks a few helpful questions about background noise, cross-talk and more before the upload begins. It won’t fix a recording but is a helpful UX nod that teaches users how to record more transcribable audio in the future.

Happy Scribe, Rev, Sonix and Trint all send emails when the transcription is ready, so there’s no need to sit around and stare at the screen.

It’s not the cheapest, nor is it the most accurate overall transcription option available, but Trint squeaked out a win as the best all-around tool of those we tested.

The company, which is just over a year old and has received funding from the Knight Foundation (disclaimer: Poynter also receives funding from Knight) and Google’s Digital News Initiative, offers the best overall combination of functionality, accuracy and ease of use.

Only YouTube’s automatic captioning feature, which scored a 72 percent accuracy rate, fared significantly better than Trint at algorithm-led transcription. But YouTube isn’t designed for the type of transcribing that journalists need on a day-to-day basis and doesn’t offer any type of editing functionality.

Though the young startup Happy Scribe fared slightly better in our accuracy tests with a 62 percent rate, and comes in at about a third the price of Trint, it lacks many of the extra features that make Trint useful. The ability to upload from many sources, find and replace text and identify speakers are small but important workflow tools. If you’re just looking for a quick and dirty transcript, Happy Scribe may be the way to go.

And though Trint’s 61 percent accuracy is far from perfect, our tests were a little bit more difficult than most real-world uses.

We also tested Rev, a human transcription service, and oTranscribe, which offers handy tools for journalists to transcribe audio on their own. At $1/minute of audio transcribed, we found Rev to be too expensive for the average journalist to use on a regular basis. And though oTranscribe was handy, it doesn’t solve the tedium and timesuck of transcribing.

With typical uses in mind, Trint is the best all-around automatic transcription tool for journalists.

Correction: We previously reported that Sonix does not offer a find-and-replace tool, but it actually does. We apologize for missing it.

Learn more about journalism tools with Try This! — Tools for Journalism. Try This! is powered by Google News Lab. It is also supported by the American Press Institute and the John S. and James L. Knight Foundation.

Originally published here.
