With 2025 expected to be AI’s biggest year yet, questions around Generative AI continue to dominate the newsroom agenda. When should (and shouldn’t) we use it? How do we effectively mitigate the risks associated with GenAI? And should we build our own AI capabilities or buy ready-made off the shelf?

To that end, we’ve got five predictions for newsrooms in the year ahead - backed up by a brand new Trint study with Producers, Editors and Correspondents from 29 newsrooms globally - along with actions to help you safely navigate your next steps with GenAI.

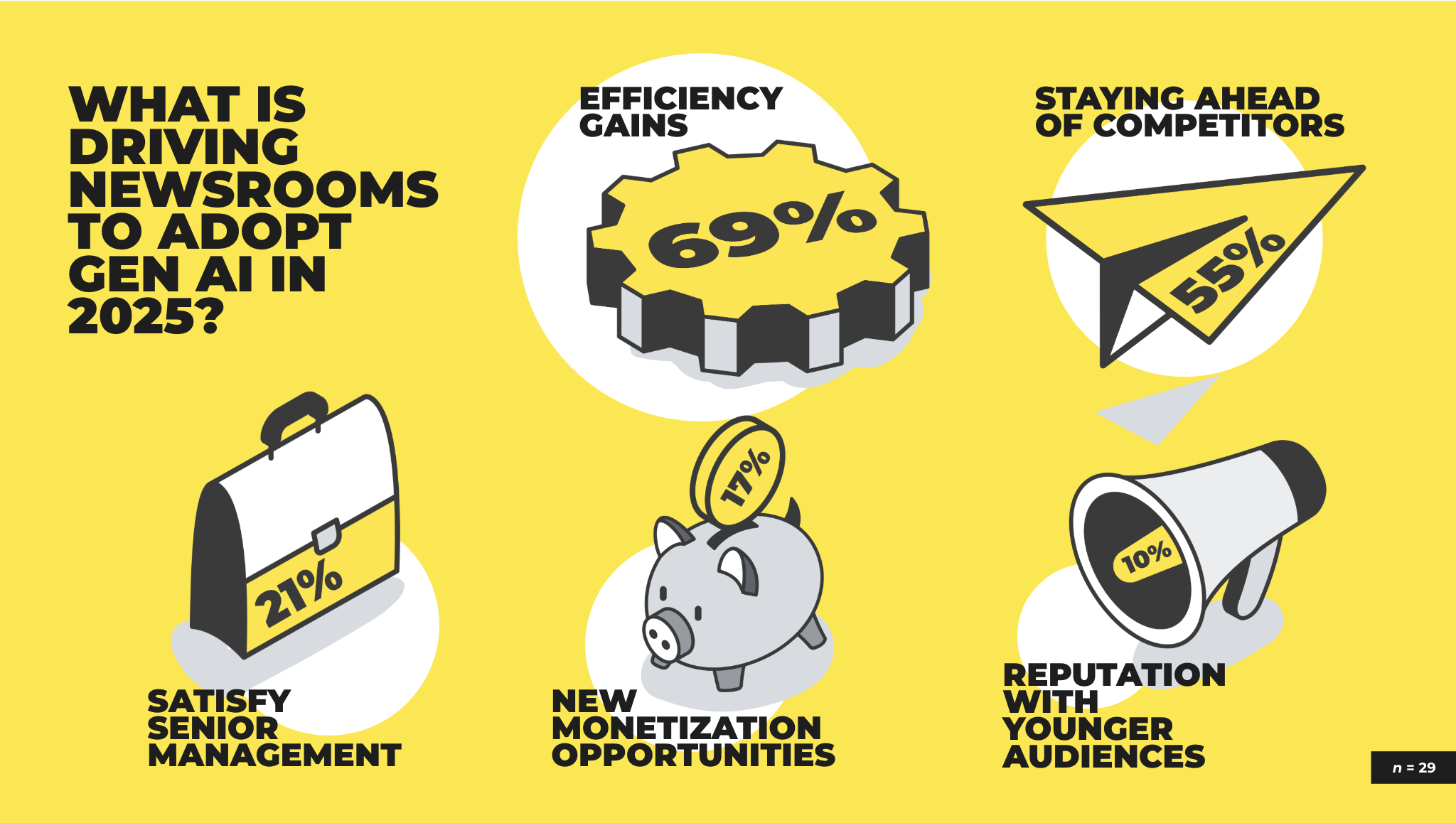

When it comes to what will drive GenAI adoption in 2025, newsroom executives seem to view AI through the lens of what it's already doing: they simply expect it to speed up workflows even further as a means of staying ahead of their rivals. Intriguingly, the community doesn't yet see GenAI as a means to serve audiences more effectively or make money (Figure 1). For 2025, newsrooms will focus their efforts on supporting the bottom line, not the top line.

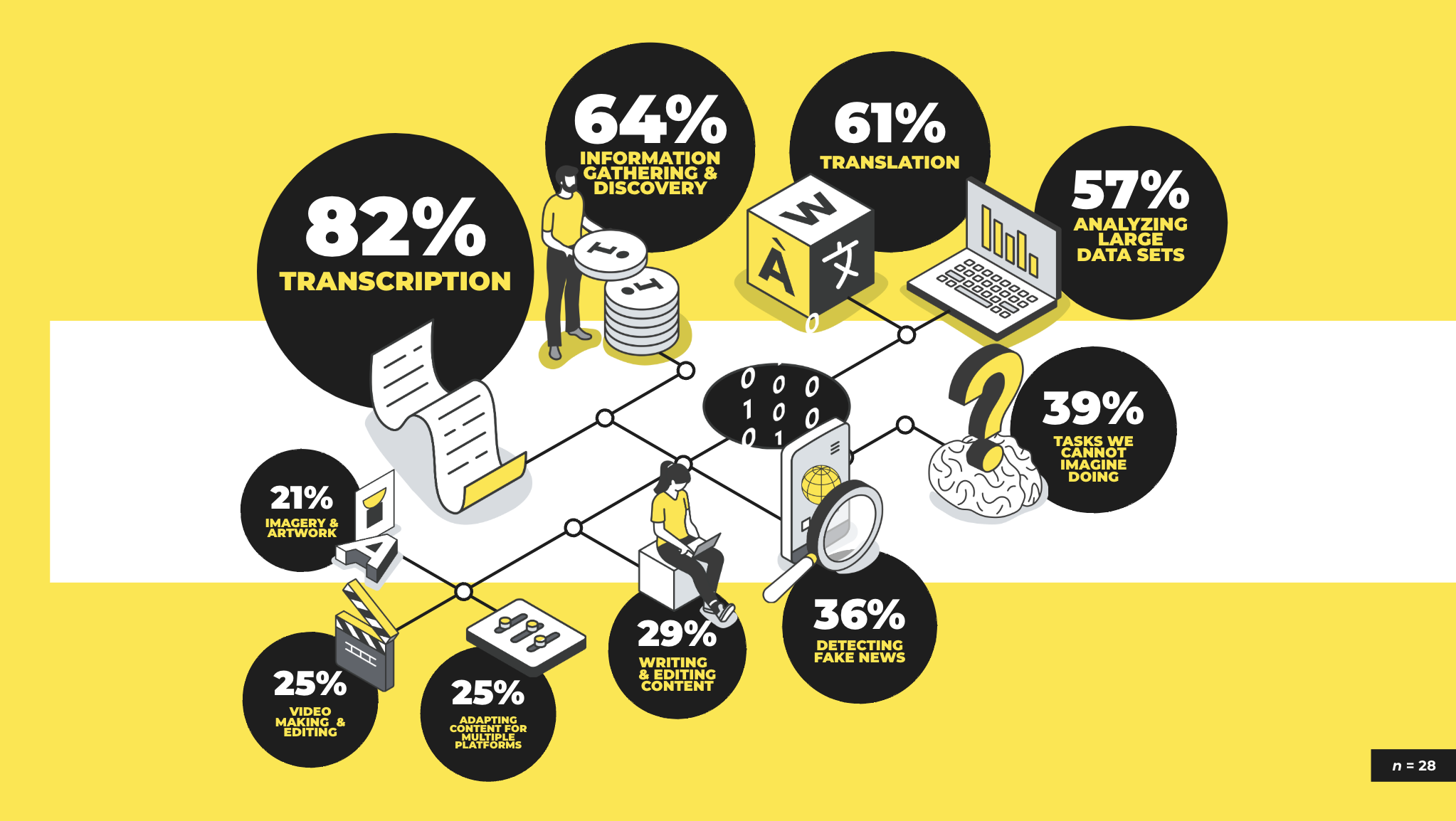

Newsrooms clearly see GenAI as a way to handle manual, laborious work. Tasks like transcription and translation, information gathering and making sense of large volumes of data are top of the to-do list (Figure 2), i.e. the so-called low-value jobs that get in the way of the more ‘meaningful’ stuff! This supports the view above that GenAI’s purpose will be to drive efficiency gains.

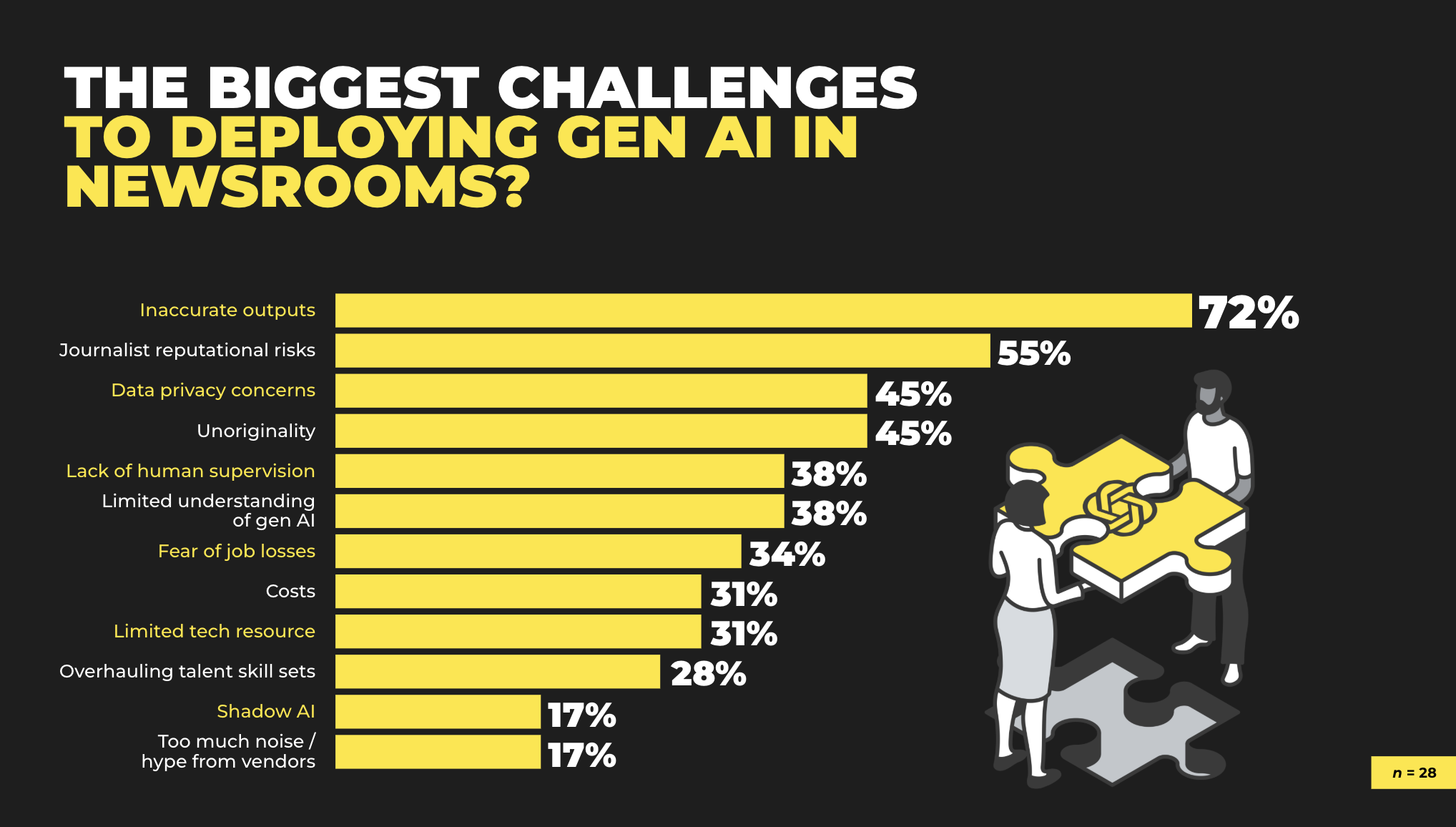

What we don’t expect to see this coming year is any significant shift towards using GenAI for creative work. Despite the publicity around AI chatbots and image generators, newsrooms look set to keep content writing, video production, imagery and artwork firmly in the hands of humans. The community will remain cautious around potential inaccuracies, hallucinations and unoriginality that might jeopardize the reputation of journalists - not to mention the newsroom’s brand (Figure 3).

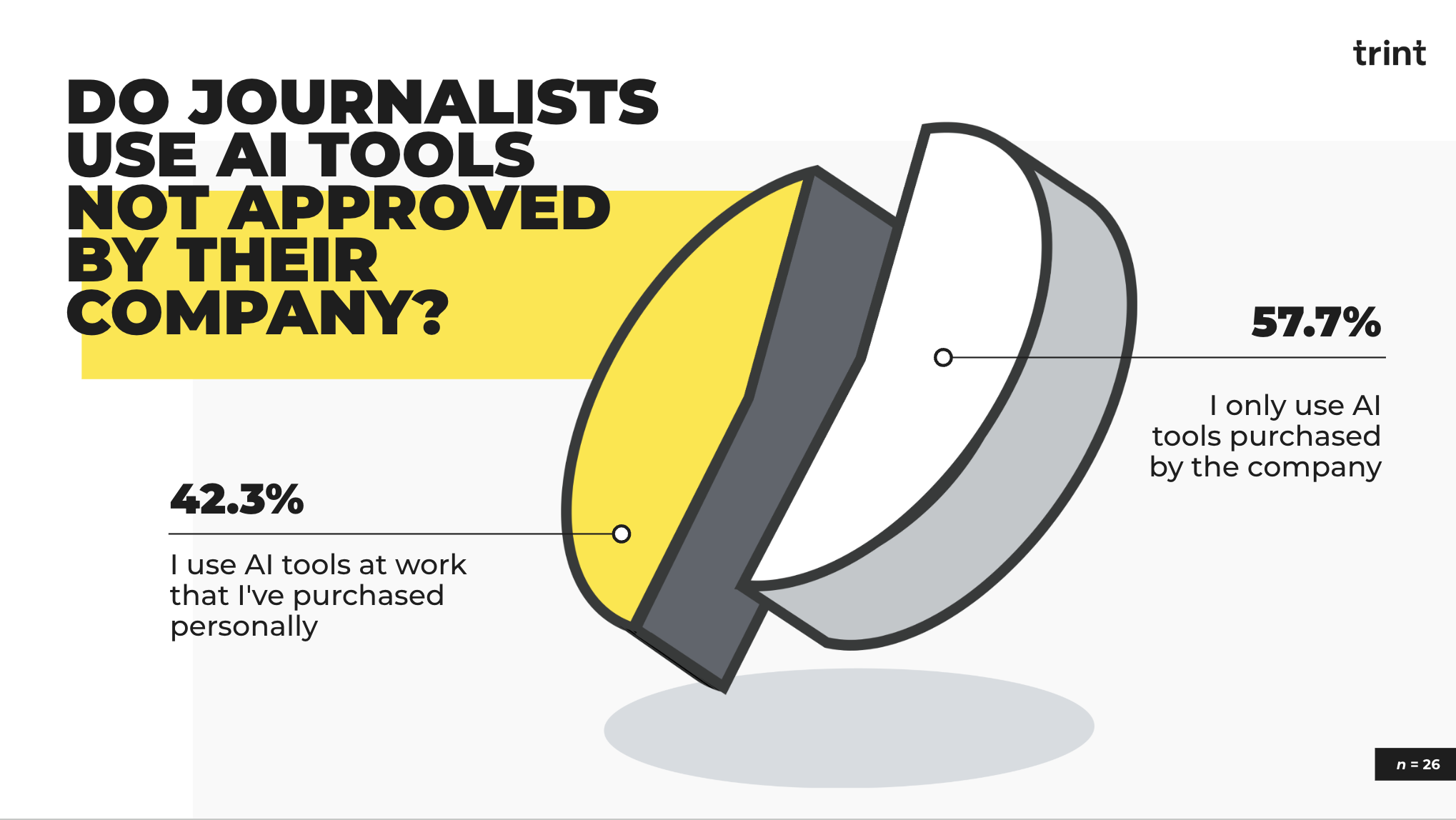

A fascinating finding from our study is that those we interviewed don’t see shadow AI - that is, the use of personally purchased AI tools without company approval - as a concern (Figure 3). On deeper inspection, however, newsroom employees are in fact routinely using their own AI tools, with nearly half of our interviewees claiming not to stick to company-sanctioned AI solutions (Figure 4).

Although experimentation with AI tools isn’t necessarily a concern, the sense is that newsrooms don’t recognize the full implications of shadow AI, which can open the gates to significant security, data privacy and regulatory incidents - not to mention the costs that come with any of the above! In 2025, the onus will be on newsrooms to work with their IT/InfoSec peers and vendors to educate employees on the risks and to create an environment in which employees have less reason to prefer their own tools.

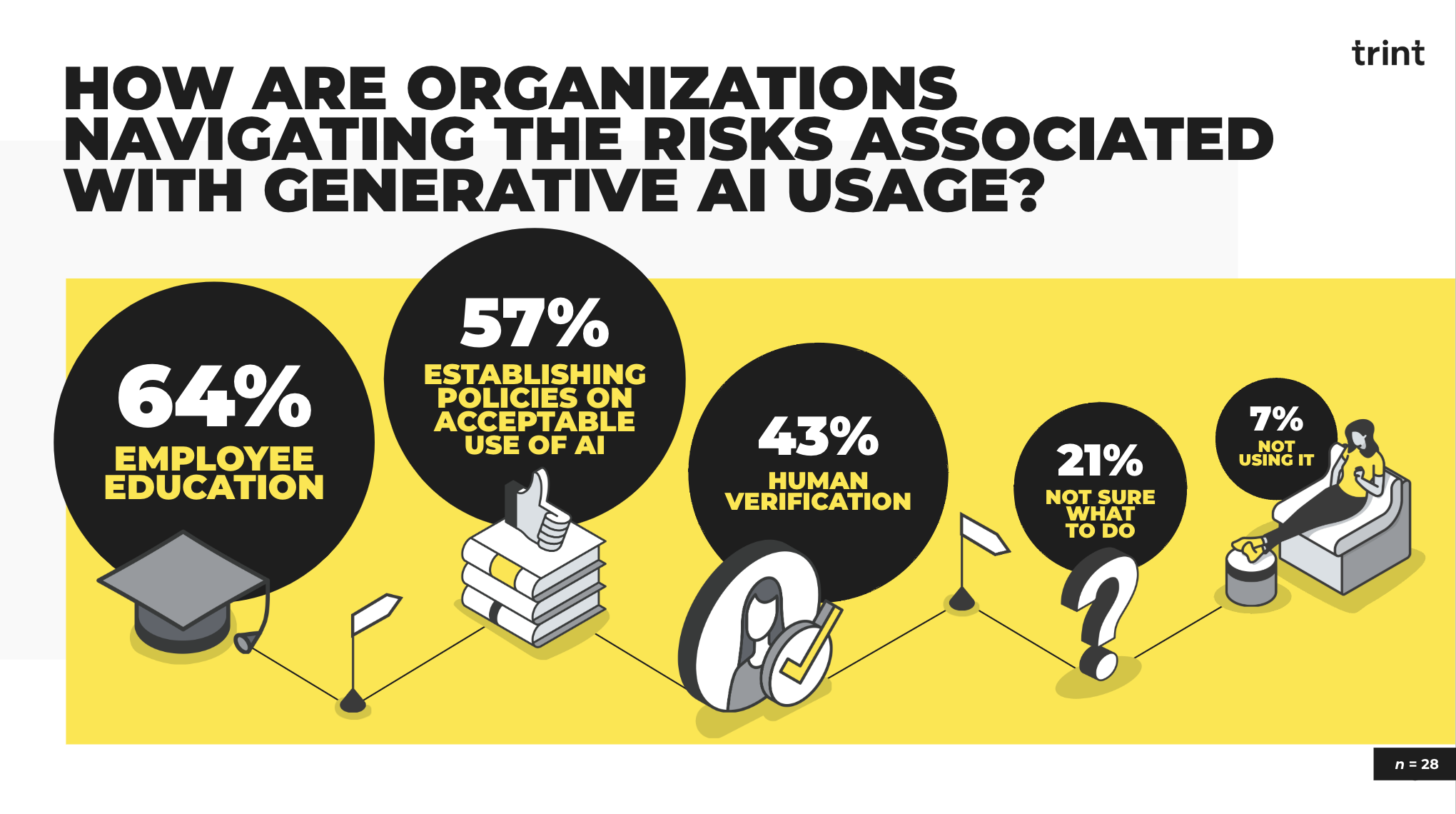

To mitigate the risks, newsrooms will bet big on educating employees around the use of GenAI, while pushing company-wide policies to safeguard responsible use. Keeping a human in the loop for verification and fact-checking purposes will also be at the core of newsrooms’ mitigation strategies this year (Figure 5).

Such courses of action suggest that newsrooms are putting the onus of responsible AI firmly on their own shoulders. Intriguingly, there’s little appetite to scrutinize technology vendors (18%), which makes sense given it can be a challenge to understand such complex topics as data sources or how algorithms learn.

However, from our point of view, it’s necessary for customers to ask questions that drill into our accuracy levels, how our algorithms are trained, and how we keep data private. We recommend that newsrooms continually ask these questions of any AI vendor; if the vendor isn’t willing to offer comprehensive answers, think very carefully about putting your data in their hands.

In short, newsrooms shouldn’t be afraid to ask the important questions that reassure them that they can trust their vendor’s AI solution.

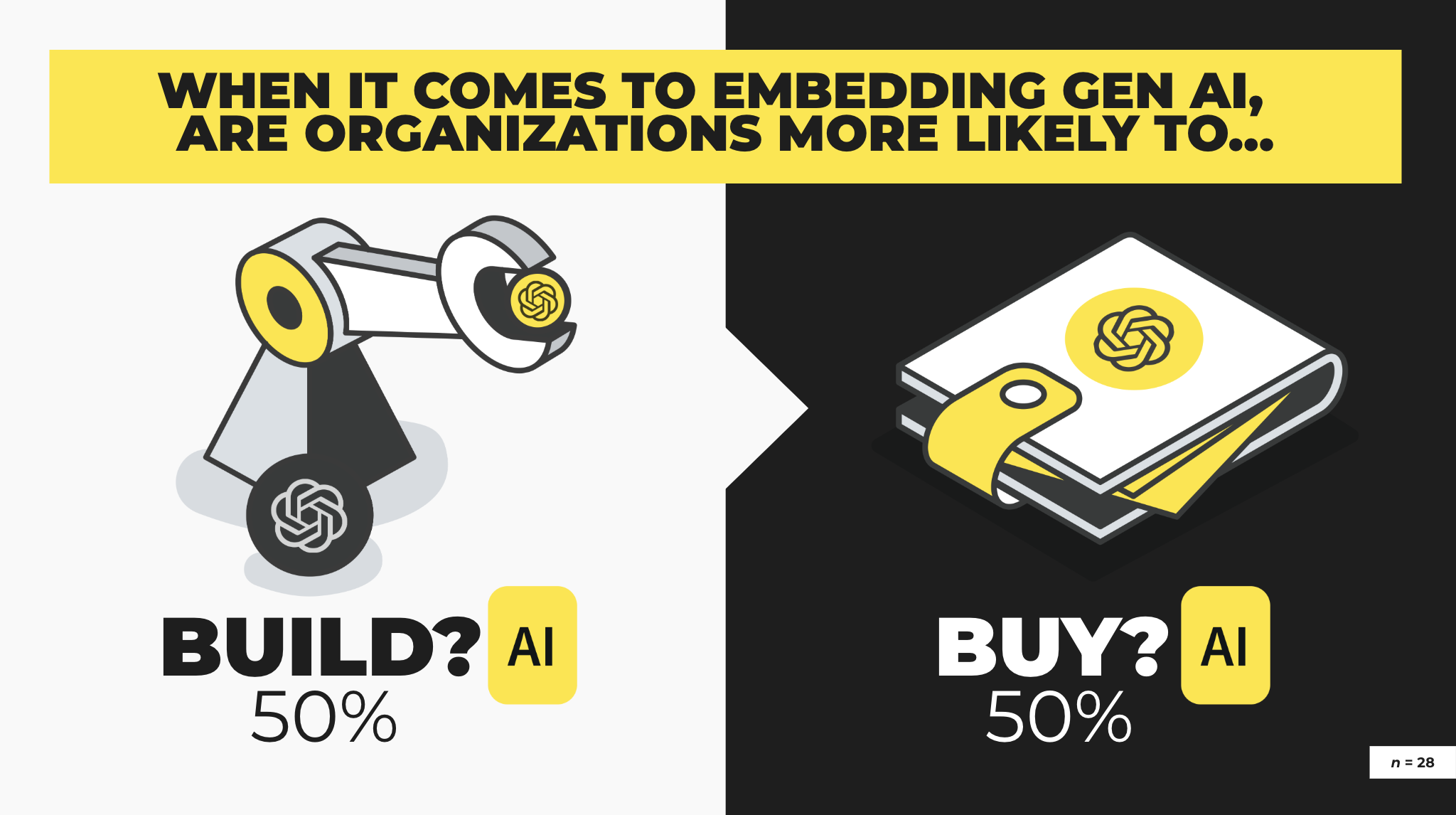

Choosing whether to build GenAI capabilities in-house or buy ready-made off the shelf will continue to be a big talking point in 2025. In an intriguing outcome, when asked whether their organization was more likely to build their own capabilities or buy off the shelf, respondents in our study were split 50/50 (Figure 6).

This reveals that the ‘right’ approach depends heavily on context. There are times when building will make sense and other times when buying will prove the better option.

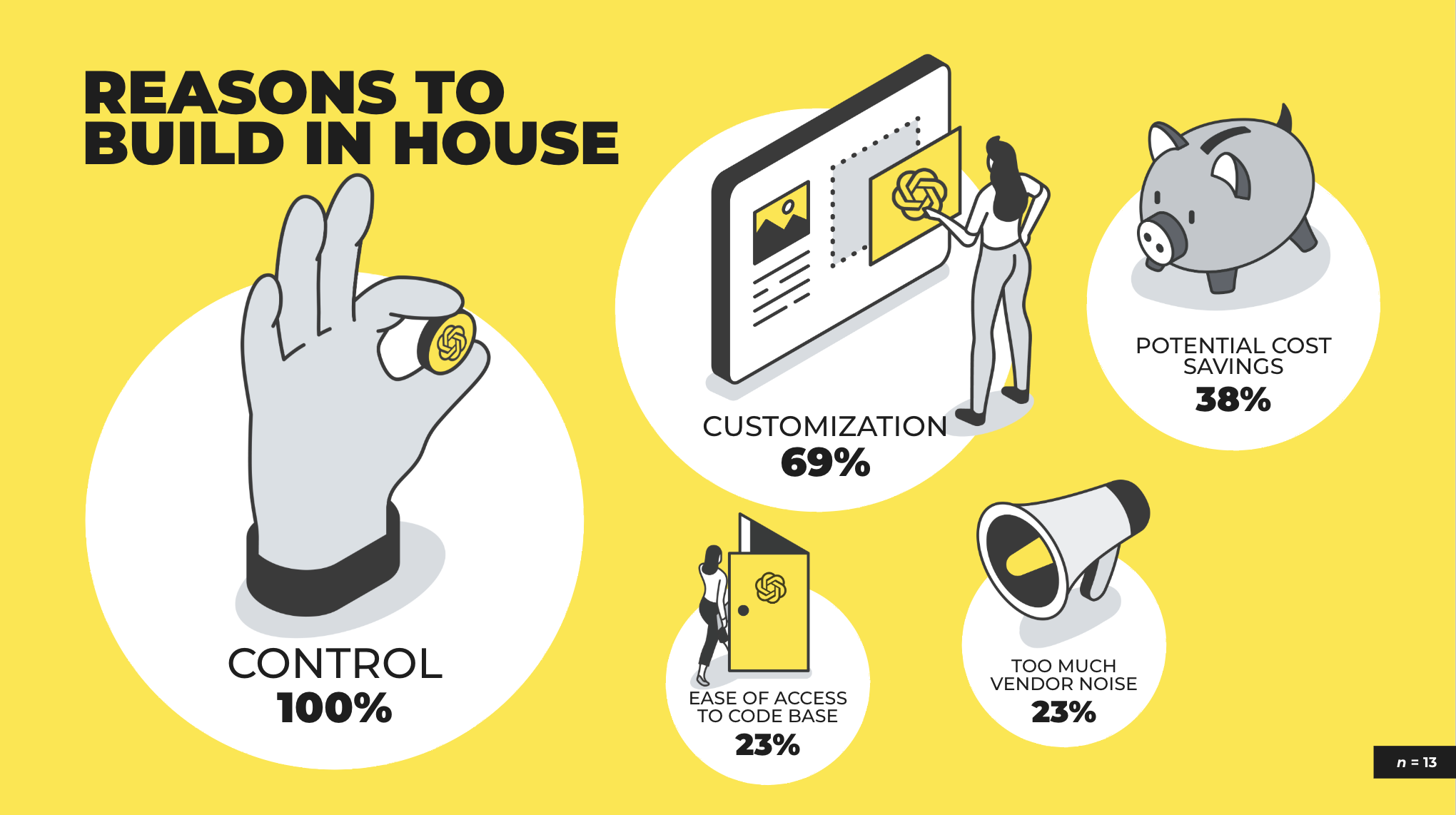

Based on whether they would more likely build or buy, we then asked our community to explain the reasons behind their preference. Those newsrooms planning to build their own GenAI capabilities overwhelmingly point to better control over what they create, e.g. when it comes to handling data, as well as the ability to customize tools - suggesting nuanced needs and requirements (Figure 7).

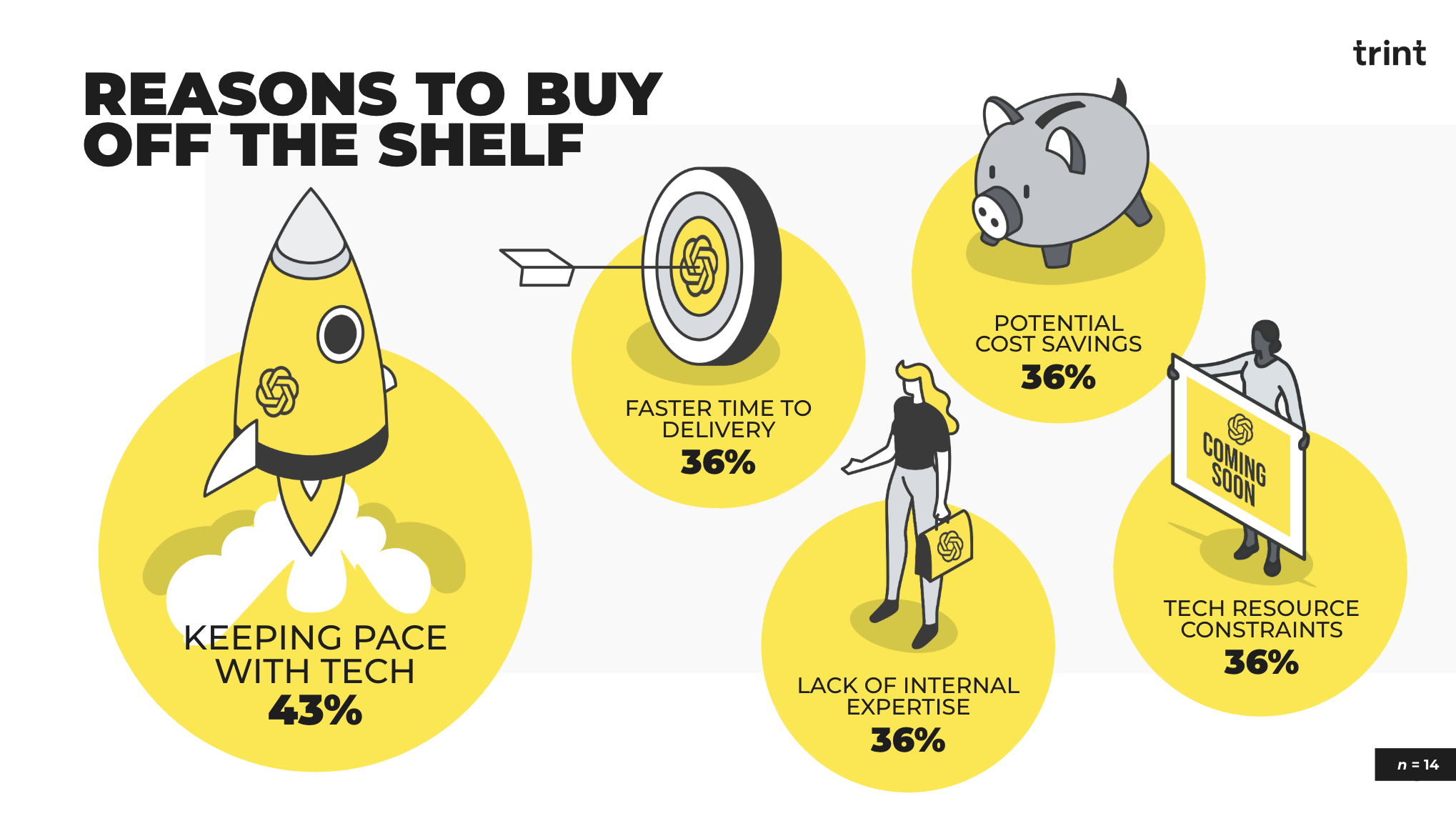

On the flip side, those who expect to buy highlight not only the chance to benefit from a vendor's position at the forefront of technological advancements, but also their own lack of technical expertise and resources (Figure 8). Building tools clearly requires a significant level of ongoing investment and, for those newsrooms that cannot keep up with the maintenance, buying ready-made capabilities will make more sense.

As far as 2025 goes, those organizations that have highly bespoke requirements - as well as in-house expertise and resources ready to go - should build their own capabilities. However, those newsrooms with technical resource constraints will likely benefit from a vendor’s expertise and knowledge.

The overarching recommendation is for newsrooms to make sure they fully understand their in-house technical expertise, resource availability, budgets and timelines before making a decision either way.